What is a prompt, and what is its value?

In your agent, you can configure prompts (think directives) that tell the agent how to take your goal and turn it into concrete instructions.

“Go do work” is a bad prompt.

“Go fix that class file by fixing the dependencies in the XYZ project” is a good prompt.

One is wide-open, making it hard to interpret, and the other is specific and goal-oriented.

Just as people crave clear instructions, so do AI agents.

A good prompt should always have these characteristics:

Clarity and Specificity – no confusion, stay on point.

Context and Constraints – set the tone, boundaries, and scope

Consistency at Scale – can you reuse them at scale for others?

Cost efficiency – well-built prompts can reduce API calls, token usage, and the number of iterations. When you are building a high-volume agent, this matters.

Domain Adaptation – the prompt should be able to bridge the gap from a general LLM (Large Language Model) to your product.
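To make the first few characteristics concrete, here is a minimal Python sketch of a prompt built from a goal, some context, and a set of constraints. The `PromptSpec` class, its field names, and the example context and constraints are all hypothetical; this is not how my agent or any particular framework stores prompts, just one way to force yourself to fill in the blanks.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PromptSpec:
    """Hypothetical container for the parts of a well-formed prompt."""

    goal: str                          # clarity and specificity: one concrete task
    context: str = ""                  # domain adaptation: what the model should know
    constraints: Tuple[str, ...] = ()  # boundaries and scope

    def render(self) -> str:
        """Assemble the parts into a single prompt string."""
        if not self.goal.strip():
            raise ValueError("a prompt needs a concrete goal")
        lines = [f"Goal: {self.goal.strip()}"]
        if self.context.strip():
            lines.append(f"Context: {self.context.strip()}")
        for rule in self.constraints:
            lines.append(f"Constraint: {rule}")
        return "\n".join(lines)


# Illustrative values only; the context and constraints are assumptions.
fix_deps = PromptSpec(
    goal="Fix that class file by fixing the dependencies in the XYZ project",
    context="The XYZ project is the codebase the agent is working in",
    constraints=(
        "Only change dependency declarations",
        "Explain each change in one sentence",
    ),
)

print(fix_deps.render())
```

Because the spec is a plain, immutable value, the same prompt can be reused by other people or other agents without drifting, which is the "consistency at scale" point above.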

I am by no means a “Prompt Engineer”, so when I was looking at my agent, I decided to go to the master to see what it thought of my prompts.

Here is what Claude came back with:

It feels a bit harsh to hear that my prompts could be that much better, but let’s see what we can do.

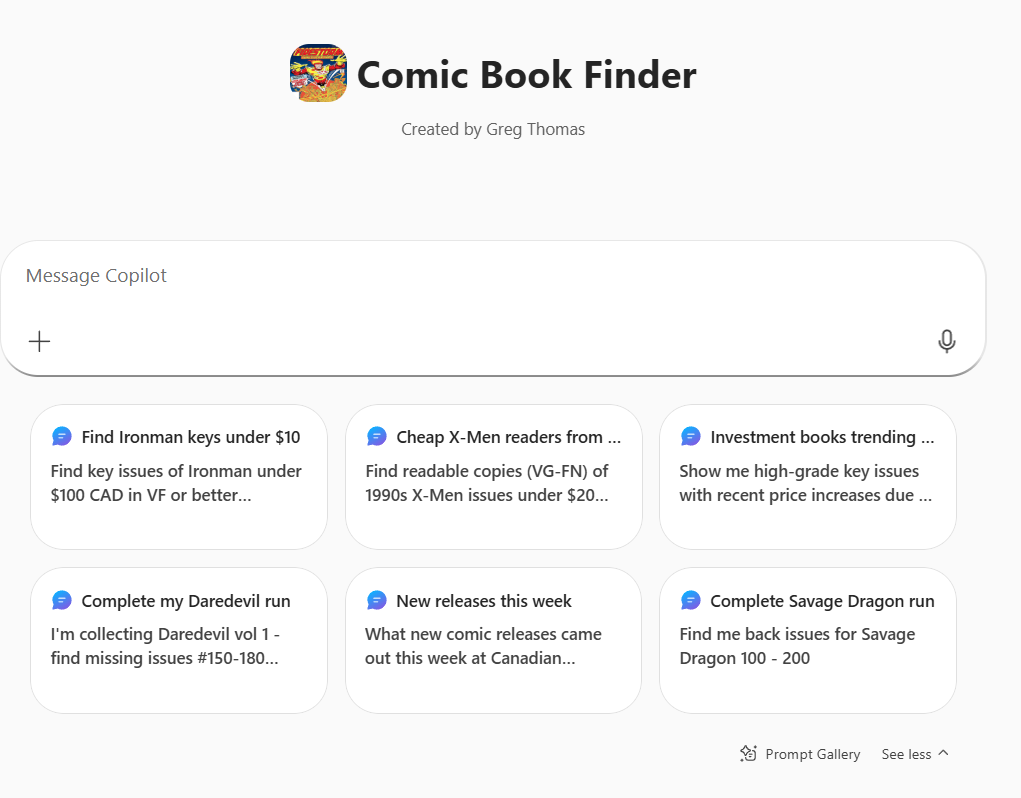

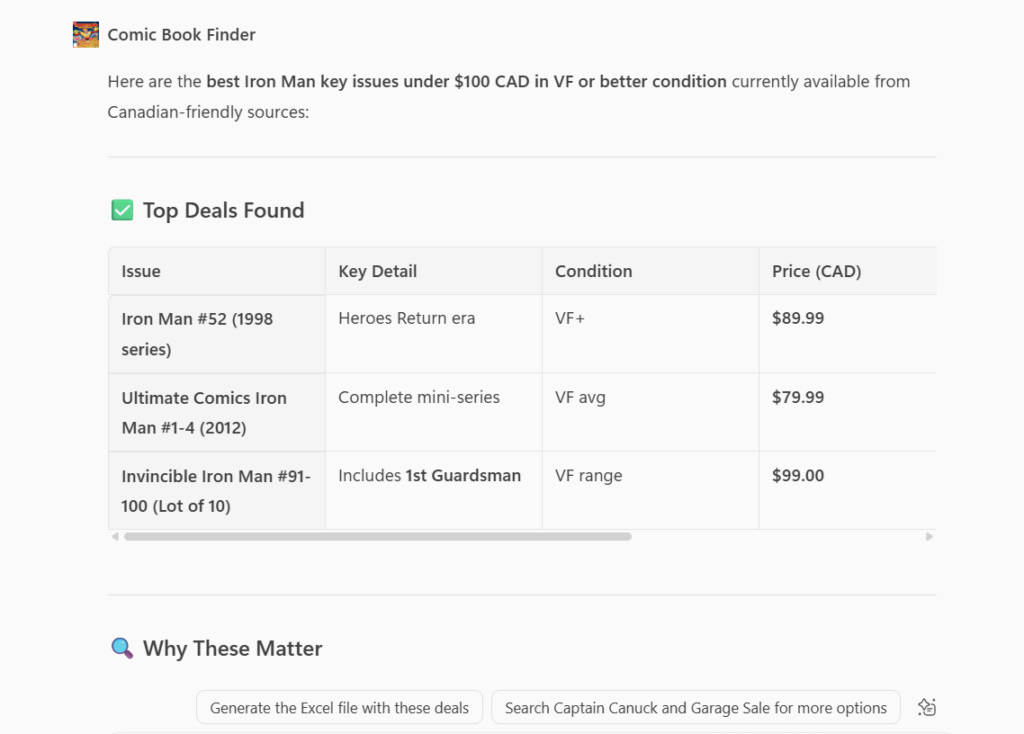

So with Claude’s help, I created some new prompts. For anyone now using the agent, they can easily click these prompts to find what they are looking for.
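As a rough illustration of what a clickable, reusable prompt could look like behind the scenes, here is a small Python sketch. The preset label, the prompt wording, and the helper names `week_range` and `build_prompt` are all hypothetical and are not the prompts Claude and I actually wrote. The one idea it shows is that an open-ended prompt such as "new releases this week" gets more specific when the date range is filled in for the user.

```python
from datetime import date, timedelta
from typing import Tuple

# Hypothetical preset prompts; the label and wording are illustrative only.
PRESET_PROMPTS = {
    "new_releases_this_week": (
        "List the new releases published between {start} and {end}. "
        "For each one, give the name, the release date, and a one-sentence summary."
    ),
}


def week_range(today: date) -> Tuple[date, date]:
    """Return the Monday and Sunday of the week that contains `today`."""
    start = today - timedelta(days=today.weekday())  # weekday(): Monday is 0
    return start, start + timedelta(days=6)


def build_prompt(label: str, today: date) -> str:
    """Look up a preset prompt and fill in the current week's date range."""
    if label not in PRESET_PROMPTS:
        raise KeyError(f"unknown preset prompt: {label}")
    start, end = week_range(today)
    return PRESET_PROMPTS[label].format(start=start.isoformat(), end=end.isoformat())


print(build_prompt("new_releases_this_week", date.today()))
```

Keeping the presets in one place means everyone who clicks the same button sends the same wording, so any improvement to a prompt reaches every user at once.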

It’s hard to measure the scale and efficiency of the prompts since I’m the only one using them. For the very constrained prompts, the information wouldn’t change, but for the more wide-open ones (e.g., new releases this week), I feel like there is some strong value here.

In the end, your prompts define what you’re trying to do and what you are going to get out of it – so don’t ignore them, and don’t leave them as vague as I did in my first iteration.