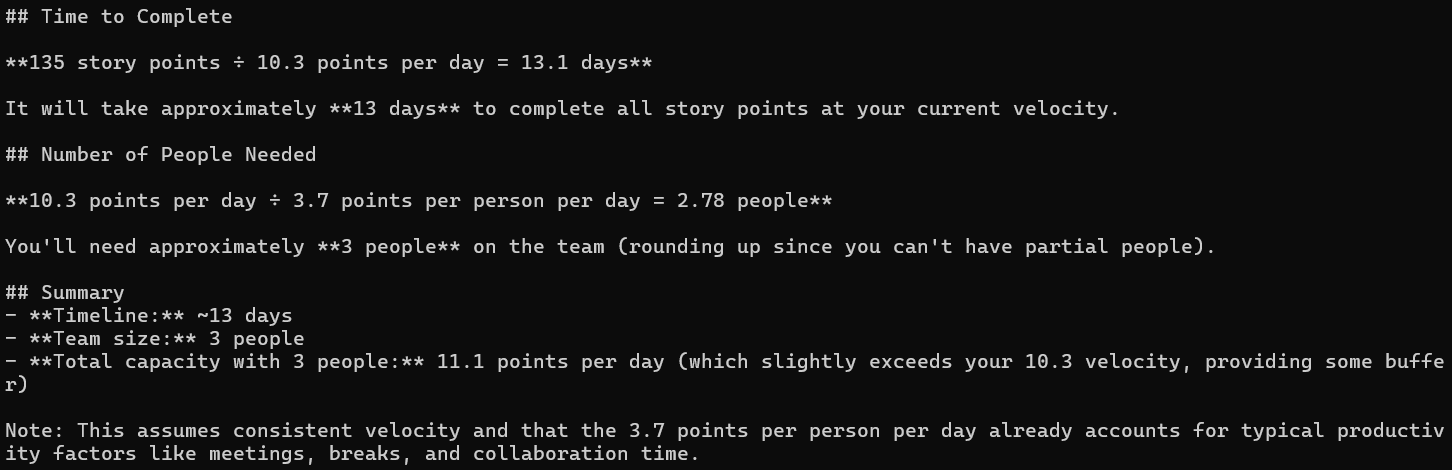

A while ago, I was playing around with DeepSeek because I was intrigued by what it gave back when you sent it a prompt. Now I’ve started using Claude.ai to figure out a few upcoming projects, so I wanted to walk through the steps of sending some prompts its way.

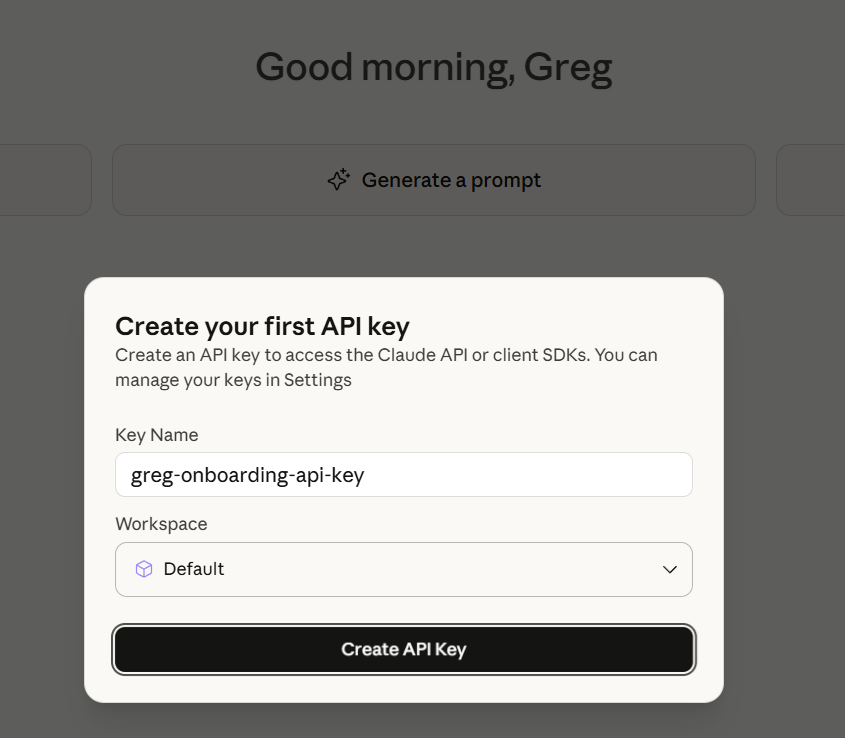

Getting your API Key

Getting set up with an API key is pretty straightforward. Head over to https://console.anthropic.com/ and follow the steps to create your key.
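Once you have a key, it has to travel with every request. Here is a minimal sketch of one way to wire that up, assuming the key lives in an environment variable called `ANTHROPIC_API_KEY` (a name picked for illustration) and that the Messages API expects the `x-api-key` and `anthropic-version` headers. The `ClaudeClientFactory` class and its method names are hypothetical helpers, not part of any SDK.

```csharp
using System;
using System.Net.Http;

public static class ClaudeClientFactory
{
    // Hypothetical helper: builds an HttpClient that carries the headers
    // the Anthropic Messages API expects on every request.
    public static HttpClient Create(string apiKey)
    {
        if (string.IsNullOrWhiteSpace(apiKey))
            throw new ArgumentException("An API key is required.", nameof(apiKey));

        var client = new HttpClient();
        client.DefaultRequestHeaders.Add("x-api-key", apiKey);
        client.DefaultRequestHeaders.Add("anthropic-version", "2023-06-01");
        return client;
    }

    // Reads the key from an environment variable (assumed name) so it
    // does not have to be hard-coded or committed to source control.
    public static HttpClient CreateFromEnvironment() =>
        Create(Environment.GetEnvironmentVariable("ANTHROPIC_API_KEY") ?? "");
}
```

Keeping the key out of the source file is the main point here; a secrets manager or user-secrets store would work just as well as an environment variable.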

As with DeepSeek and most hosted AI APIs, you’ll have to add some credits to your account before you can run queries. Claude does this in increments of $5.

The Code

The code to work with Claude is pretty much the same as for DeepSeek: you configure a model, a token limit, messages, and roles. If anything good is coming out of AI, it may be that we are finally getting to the part of coding we have waited so long for, where we have consistent interfaces across implementations.

```csharp
// Requires: using System.Text; using System.Text.Json;
// Assumes _httpClient already sends the x-api-key and anthropic-version headers.
var requestBody = new
{
    model = "claude-opus-4-1-20250805", // or other model strings
    max_tokens = 1000,
    messages = new[]
    {
        new { role = "user", content = prompt }
    }
};

// Serialize the anonymous object and POST it as JSON.
var json = JsonSerializer.Serialize(requestBody);
var content = new StringContent(json, Encoding.UTF8, "application/json");
var response = await _httpClient.PostAsync(
    "https://api.anthropic.com/v1/messages",
    content
);
response.EnsureSuccessStatusCode();

// The reply text sits in the first block of the "content" array.
var responseBody = await response.Content.ReadAsStringAsync();
var result = JsonSerializer.Deserialize<JsonElement>(responseBody);
return result.GetProperty("content")[0].GetProperty("text").GetString();
```

The Prompt

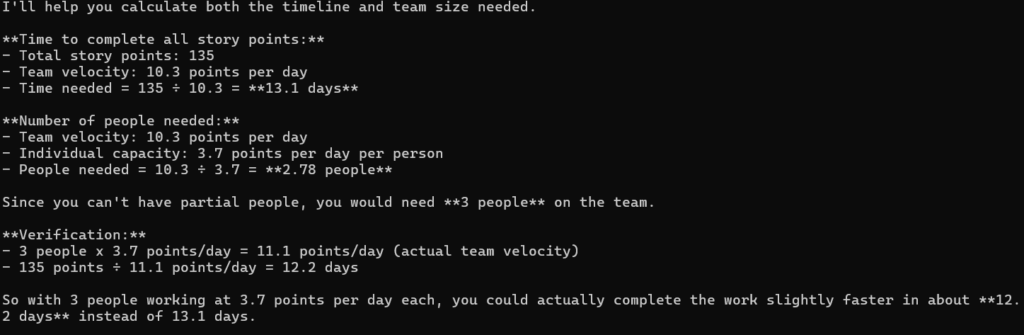

I wanted to keep my message to Claude similar to what I sent DeepSeek to see what the results would be.

Opus 4.1 (claude-opus-4-1-20250805)

The latest and greatest for complex challenges and advanced reasoning.

Opus 4.0 (claude-opus-4-20250514)

The previous generation of Opus, similar to Opus 4.1, for advanced reasoning and complex problems.

Sonnet 4 (claude-sonnet-4-20250514)

A solid balance of capabilities and speed.
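Trying the same prompt against each of these models comes down to swapping the model string. The sketch below builds one request body per model and pulls the text out of a response, assuming each block in the response's `content` array has a `type` field and that text blocks also have a `text` field. `ModelComparison`, `BuildRequests`, and `ExtractText` are hypothetical names for illustration, and nothing here touches the network.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

public static class ModelComparison
{
    // The model strings listed above.
    public static readonly string[] Models =
    {
        "claude-opus-4-1-20250805",
        "claude-opus-4-20250514",
        "claude-sonnet-4-20250514"
    };

    // Builds one JSON request body per model, all sharing the same prompt.
    public static Dictionary<string, string> BuildRequests(string prompt, int maxTokens = 1000) =>
        Models.ToDictionary(
            model => model,
            model => JsonSerializer.Serialize(new
            {
                model,
                max_tokens = maxTokens,
                messages = new[] { new { role = "user", content = prompt } }
            }));

    // Joins every "text" block found in a response body's "content" array.
    public static string ExtractText(string responseBody)
    {
        using var doc = JsonDocument.Parse(responseBody);
        var parts = doc.RootElement.GetProperty("content")
            .EnumerateArray()
            .Where(block => block.GetProperty("type").GetString() == "text")
            .Select(block => block.GetProperty("text").GetString());
        return string.Join("", parts);
    }
}
```

Each value returned by `BuildRequests` can be posted the same way as the request in the code section, which keeps the comparison down to a loop over model names.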

Why do this? What’s the point?

Granted, my problem isn’t rocket science, but as I and many others work to integrate AI into our workflows, it’s more important than ever to understand what is happening under the hood. As with anything, the people who understand what is happening underneath will be the ones who make the most of it going forward.

- Working with different AI models is like talking to different people; they might all give you the same answer, but they arrive at it from different angles.

- The code and the inputs are the same, just as they are when dealing with people.

- Our reasoning choices shift, just as they do with people.

- The code to get at the data, the place where we used to build our success, will be greatly simplified going forward. What matters next will be what you can do with this data and how you can leverage it.

A few months ago, I spent a week deep-diving into lovable.ai. It was an interesting detour and definitely showed the allure of Vibe Coding. As a developer, I found it interesting but frustrating, and I could see why complete non-developers would be salivating: they can see their ideas come to life, even if they have to repeat their instructions a few times.

Is AI doing things differently? Not entirely. Is it changing how we go about getting things done?

Completely.

When the code is the same, how do you differentiate your work?