I had a requirement a few months ago where I needed to take a freshly uploaded Excel file from Power Apps, parse it, and create a bunch of records in Dataverse related to that Excel file.

Easy right? Thinking back to the days when I would do this with C# and the Excel API, I thought I’d be uploading the file, cracking open the stream, browsing through it, and finishing mighty quick.

But as I found out there are a number of gotchas at play here.

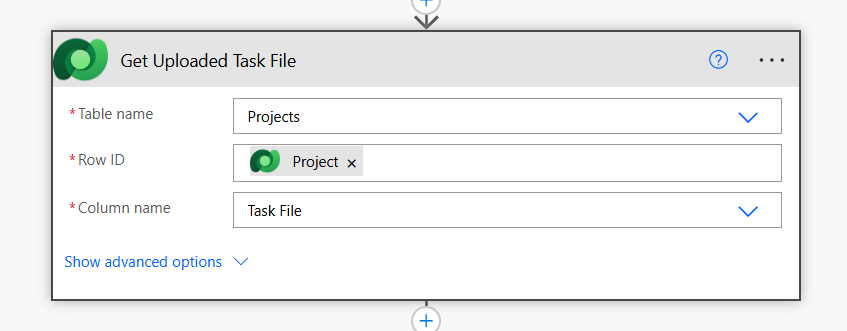

Accessing your Uploaded File

When accessing a file, you don’t need to go through all the hurdles of going through the file table and searching for your referenced attachment. Instead, you use the “Download a file or an image” action, point it to your entity, and then watch as Power Automate loads the available columns to do this.

At this point, you have a stream of all your data that was just uploaded, and in my case, it is a stream of Excel content, which, sadly, the Excel Connector for Power Automate does not play nice with.

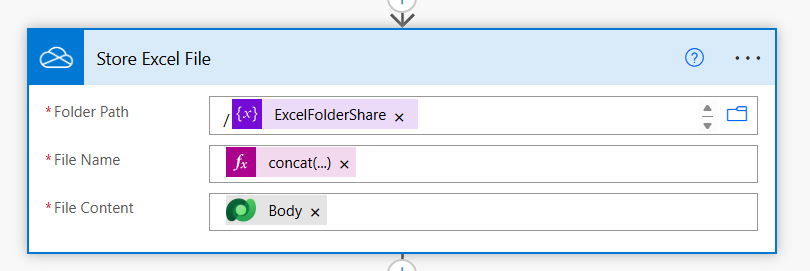

Temporarily Storing your File Stream

To get around this, you need to save the file stream somewhere, anywhere, as a physical file. In my case, I used OneDrive.

In my “concat” expression, all I’m doing is creating a unique file name for the file I am being generated.

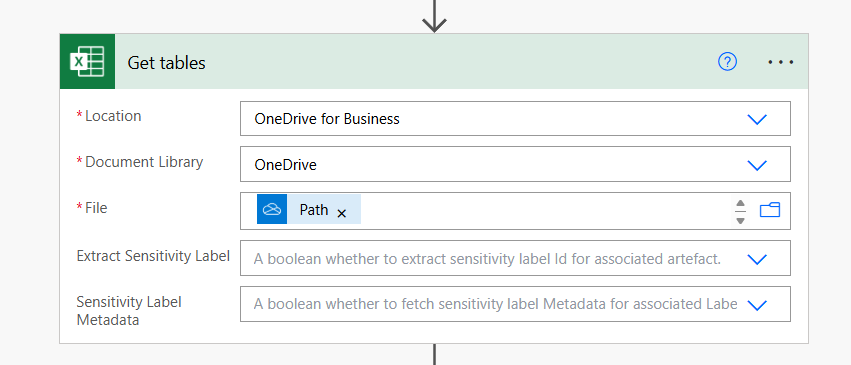

Accessing Sheets and Tables

Here is where I spent too much time figuring this out. To be able to parse data via Power Automate, you need to provide a named structure for it to work with. Excel won’t just run through rows (there is probably, most likely, a performance reason for this; otherwise, I don’t get it).

In my case, and I would imagine in most people’s cases, I don’t know what table I’m parsing at runtime, so I need to get a list of all the tables in the file I have now stored on OneDrive.

Note: If you are using OneDrive for Business, your Location and Document Library parameters will always be the same.

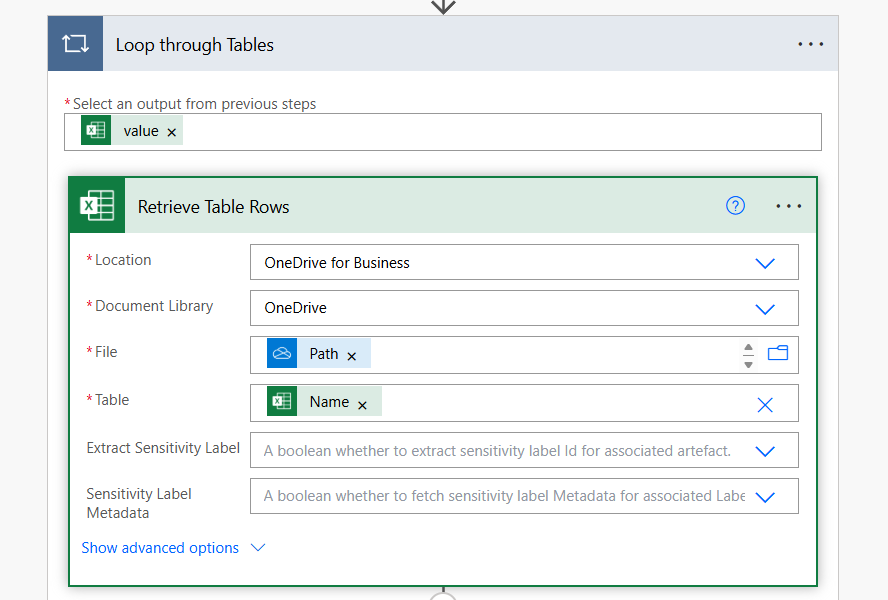

From here, I then loop through all the tables in my file (of which there is one), retrieving the name of the table I want to use, and I get to see all those wonderful rows of data.

Convert JSON and Have at It

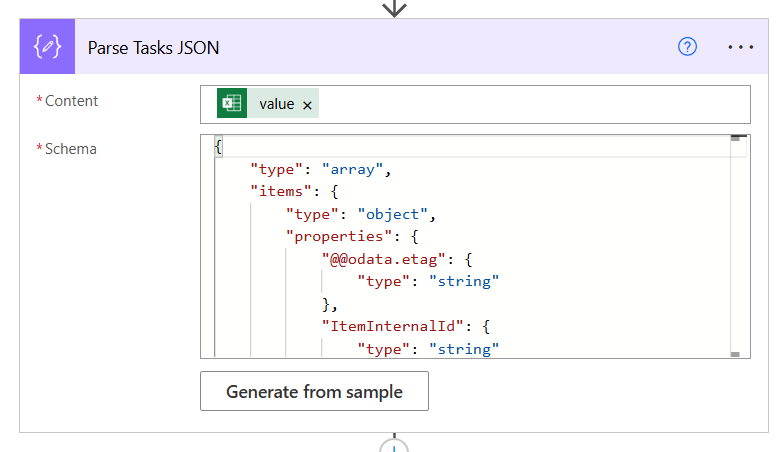

Okay, the best part about this process was when I got to see all my data returned in JSON. At this point, I was then able to copy the sample input, generate a sample schema, have those nodes exposed via Power Automate, and get to my task of parsing this data back to the Dataverse (in my scenario, I was building out a set of tasks.

This was invariably the best part about the Excel connector: the data was returned in JSON.

Great for me, I know how to code, parse JSON and JSON Schema – awesome, love it.

But it begs the question to me once more, how is doing this No Code, if I am asking someone to debug the output from the connector, save it as a file, then copy and paste, and then get access to their data? I know the initial response is going to be – “but I want my cell data, not JSON nodes”.

Alas, I digress, but once I figured out the ins and outs of parsing Excel into Power Automate and doing some magic with it, I now get where the stumbling blocks can arise.